Visualize the Insides of a Neural Network

To understand the inner workings of a trained image classification network, one can visualize the image features that the neurons within the network respond to.

Load a pre-trained network.

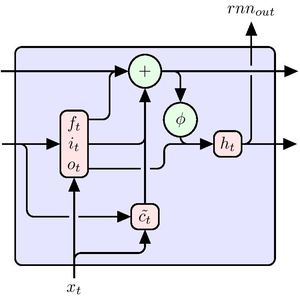

The image features that neurons in the first convolution layer respond to are given directly by their convolution kernels, because these kernels operate on the input image itself.

These neurons encode low-level features, such as grayscale and color edges and lines at various orientations.

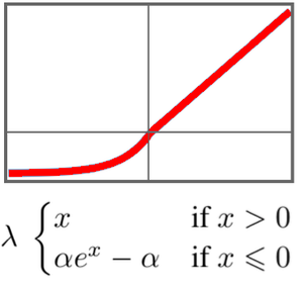
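To turn a kernel into a picture, you only need to rescale its weights into a displayable range. The following is a minimal NumPy sketch. It assumes a single first-layer filter stored channel-first with shape (3, height, width). The function name and the random stand-in weights are hypothetical, not the network's actual parameters.

```python
import numpy as np

def kernel_to_image(kernel: np.ndarray) -> np.ndarray:
    """Rescale one first-layer kernel of shape (3, kh, kw) to an RGB image in [0, 1]."""
    k = kernel.astype(np.float64)
    lo, hi = k.min(), k.max()
    if hi > lo:
        k = (k - lo) / (hi - lo)      # min-max rescaling to [0, 1]
    else:
        k = np.full_like(k, 0.5)      # constant kernel: show uniform gray
    # Move the color axis last so the array has the usual (kh, kw, 3) image layout.
    return np.transpose(k, (1, 2, 0))

# Usage with a random stand-in for a trained 7x7 kernel (hypothetical weights).
rng = np.random.default_rng(0)
img = kernel_to_image(rng.normal(size=(3, 7, 7)))
print(img.shape)  # (7, 7, 3)
```

This sketch rescales each kernel on its own. That makes weak filters visible but hides differences in magnitude between filters. The alternative is to rescale all kernels using one shared range.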

However, this simple approach does not work for neurons in layers further down the processing chain of the network, because their kernels act on the outputs of earlier layers rather than on image pixels. Instead, you can use Google’s DeepDream algorithm to make the features that such neurons respond to emerge in a random input image.

First, specify a layer and feature that you would like to visualize.

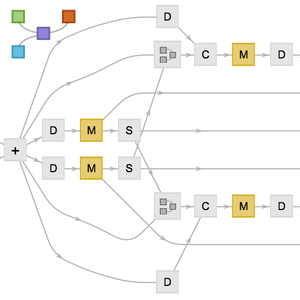

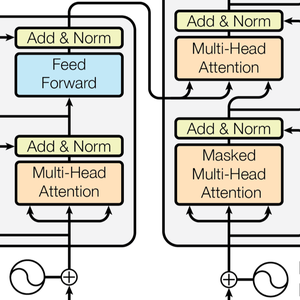

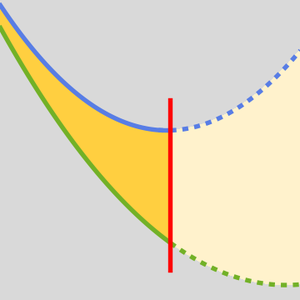

To get an impression of what a neuron encodes, truncate the network at the given layer and attach layers that compute the total squared activity of the specified neurons.
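The objective attached to the truncated network is easy to write down. The sketch below assumes the layer output is a NumPy array of shape (channels, height, width) and that the chosen "feature" is one channel. The function names are hypothetical. The sketch also computes the gradient at the layer output, which backpropagation would then carry back to the input image.

```python
import numpy as np

def total_square_activity(activations: np.ndarray, channel: int) -> float:
    """Sum of squared activations of one feature channel.

    `activations` is assumed to have shape (channels, height, width), i.e. the
    output of the network truncated at the chosen layer.
    """
    return float(np.sum(activations[channel] ** 2))

def total_square_activity_grad(activations: np.ndarray, channel: int) -> np.ndarray:
    """Gradient of the objective with respect to the layer output: 2*a on the
    chosen channel and zero on every other channel."""
    grad = np.zeros_like(activations)
    grad[channel] = 2.0 * activations[channel]
    return grad

acts = np.arange(8, dtype=float).reshape(2, 2, 2)  # toy layer output
print(total_square_activity(acts, 1))  # 4^2 + 5^2 + 6^2 + 7^2 = 126.0
```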

Maximizing the output of this network by repeatedly updating a random input image with the network's backpropagation gradient yields an image that primarily excites the neurons in question. The resulting image thus exhibits the features to which those neurons respond.
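The core of the method is gradient ascent on the input image rather than on the weights. As a toy illustration, the sketch below replaces the truncated network with a single linear detector `w` and maximizes its squared response. This is an assumption, not the trained network. The gradient is computed analytically here, where a real run would use backpropagation.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical "neuron": a fixed linear detector on an 8x8 grayscale image.
w = rng.normal(size=(8, 8))

def objective(x: np.ndarray) -> float:
    """Squared response of the toy neuron (stands in for total squared activity)."""
    return float(np.sum(w * x) ** 2)

def input_gradient(x: np.ndarray) -> np.ndarray:
    """d/dx (w . x)^2 = 2 (w . x) w, computed by hand in place of backpropagation."""
    return 2.0 * np.sum(w * x) * w

x = rng.uniform(0.0, 1.0, size=(8, 8))   # random initial image
f_start = objective(x)
for _ in range(200):
    # Ascend the gradient, then clip so pixel values stay in [0, 1].
    x = np.clip(x + 0.01 * input_gradient(x), 0.0, 1.0)
f_end = objective(x)
print(f_end > f_start)  # True
```

The squared response is symmetric, so the image tends toward bright pixels where `w` is positive and dark pixels where it is negative, or the reverse. Either pattern strongly excites the toy neuron.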

Create an initial image that exhibits randomness at all scales.
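The section does not say how the multiscale random image is built. One possible construction, offered as an assumption, sums uniform noise generated at successively coarser resolutions and upsampled to full size. The result has random structure ranging from single pixels up to large blobs. The 224-pixel size and six levels are illustrative choices.

```python
import numpy as np

def multiscale_noise(size: int = 224, levels: int = 6, seed: int = 0) -> np.ndarray:
    """Hypothetical construction of an RGB image with randomness at all scales."""
    coarsest = 2 ** (levels - 1)
    if size % coarsest != 0:
        raise ValueError("size must be divisible by 2**(levels - 1)")
    rng = np.random.default_rng(seed)
    img = np.zeros((size, size, 3))
    for k in range(levels):
        factor = 2 ** k
        # Uniform noise at 1/factor of the full resolution ...
        small = rng.uniform(size=(size // factor, size // factor, 3))
        # ... upsampled to full size by repeating each pixel factor x factor times.
        img += np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)
    # Rescale the sum into the [0, 1] pixel range.
    img -= img.min()
    return img / img.max()

noise = multiscale_noise()
print(noise.shape)  # (224, 224, 3)
```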

The NetPortGradient["Input"] command provides the backpropagation gradient at the input port of the network. The following code rescales the gradient so that its maximum coefficient equals 1/8 and converts it into an image.
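Normalizing by the peak coefficient makes the step size independent of the raw gradient magnitude, which can vary widely between layers and iterations. Below is a NumPy sketch of that rescaling. It assumes the maximum is taken over absolute values, and the function name is hypothetical.

```python
import numpy as np

def gradient_to_step(grad: np.ndarray, max_coeff: float = 1 / 8) -> np.ndarray:
    """Rescale a gradient so that its largest absolute coefficient equals max_coeff."""
    peak = np.max(np.abs(grad))
    if peak == 0:
        return np.zeros_like(grad)   # nothing to scale
    return grad * (max_coeff / peak)

g = np.array([[0.5, -2.0], [1.0, 0.25]])
step = gradient_to_step(g)
print(np.max(np.abs(step)))  # 0.125
```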

Before iteratively updating the image with this gradient image, you need a jitter function that shifts an image by a small random offset.
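A jitter can be sketched with `np.roll`, which shifts an array circularly so that pixels leaving one edge re-enter on the other. This wrap-around behavior and the default maximum offset of 8 pixels are assumptions. The original implementation may pad or crop instead. The function returns the offset so the shift can be undone; `unjitter` is a hypothetical helper.

```python
import numpy as np

def jitter(img: np.ndarray, max_shift: int = 8, rng=None):
    """Circularly shift an (H, W, ...) image by a random offset in
    [-max_shift, max_shift] along each spatial axis.
    Returns the shifted image and the (dy, dx) offset."""
    rng = np.random.default_rng() if rng is None else rng
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    return np.roll(img, shift=(dy, dx), axis=(0, 1)), (dy, dx)

def unjitter(img: np.ndarray, offset) -> np.ndarray:
    """Undo a shift produced by jitter."""
    dy, dx = offset
    return np.roll(img, shift=(-dy, -dx), axis=(0, 1))

img = np.arange(16.0).reshape(4, 4)
shifted, off = jitter(img, max_shift=2, rng=np.random.default_rng(0))
print(np.array_equal(unjitter(shifted, off), img))  # True
```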

Incorporating a random jitter into the following update loop helps spread out local artifacts of the backpropagation and yields a smoother, more regular image.
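The loop below combines the steps. It jitters the image, computes the input gradient on the shifted image, and shifts the gradient back. It then takes a normalized step and clips the pixels. The network is again a hypothetical stand-in: a single 3x3 filter applied with wrap-around boundaries. Its total squared activity is the objective, and its gradient is computed by hand instead of by backpropagation. The step size of 1/8, shifts of up to 4 pixels, and 256 iterations are illustrative.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical stand-in for the truncated network: one 3x3 filter applied with
# circular (wrap-around) boundaries to a 32x32 grayscale image.
kernel = rng.normal(size=(3, 3))
OFFSETS = [(i, j) for i in (-1, 0, 1) for j in (-1, 0, 1)]

def feature_map(x: np.ndarray) -> np.ndarray:
    # a = K x, written as a weighted sum of shifted copies of x.
    return sum(kernel[i + 1, j + 1] * np.roll(x, (i, j), axis=(0, 1)) for i, j in OFFSETS)

def objective(x: np.ndarray) -> float:
    # Total squared activity of the toy feature map.
    return float(np.sum(feature_map(x) ** 2))

def input_gradient(x: np.ndarray) -> np.ndarray:
    # Gradient of sum(a^2) is 2 K^T a; the adjoint of each roll is the opposite roll.
    a = feature_map(x)
    return 2.0 * sum(kernel[i + 1, j + 1] * np.roll(a, (-i, -j), axis=(0, 1)) for i, j in OFFSETS)

def update(x: np.ndarray, max_coeff: float = 1 / 8, max_shift: int = 4) -> np.ndarray:
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    shifted = np.roll(x, (dy, dx), axis=(0, 1))                       # random jitter
    g = np.roll(input_gradient(shifted), (-dy, -dx), axis=(0, 1))     # shift gradient back
    peak = np.max(np.abs(g))
    if peak > 0:
        x = x + g * (max_coeff / peak)                                # normalized step
    return np.clip(x, 0.0, 1.0)                                       # keep pixels in [0, 1]

x = rng.uniform(size=(32, 32))   # random initial image
f_start = objective(x)
for _ in range(256):
    x = update(x)
f_end = objective(x)
print(f_start < f_end)  # True
```

This toy filter is exactly invariant under circular shifts, so the jitter does not change its gradient. In a real network, padding, strides, and pooling break that invariance, which is where jitter is expected to help.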

Updating the initial random image 256 times returns the following image. The neurons in question seem to encode small birds or chickens.

Feel free to repeat the above procedure for other features in other layers. You will notice that neurons in shallow layers encode simple features, such as textures, whereas neurons in deep layers encode more complex features, such as animal faces.

Features of inception layer 3b.

Features of inception layer 4d.