Neural Network Sensitivity Map

Just like humans, neural networks tend to take shortcuts, and those shortcuts can fail. For example, if a network is trained on animal images and every "wolf" image has snow in the background, then snow becomes a "wolf" feature. The network can use the snow as a shortcut and may not have learned anything about the appearance of a wolf. As a consequence, a deer in snowy conditions may be misclassified as a wolf.

To better understand a misclassification and to verify whether a classification network has learned the desired features, the following analysis is useful.

Classify an arbitrary image and note the classification probability. Then cover part of the image and note whether the classification probability goes up or down. If the probability goes down, the covered area contains features that support the classification. If the probability goes up, the covered area contains features that inhibit the classification.
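The rule above can be written compactly. The notation here is introduced only for illustration: $p(x)$ is the classification probability for image $x$, and $x_{\setminus R}$ is the same image with region $R$ covered.

$$
\Delta p(R) = p(x) - p\left(x_{\setminus R}\right)
$$

A positive $\Delta p(R)$ means the probability dropped when $R$ was covered, so $R$ supports the classification. A negative $\Delta p(R)$ means $R$ inhibits it.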

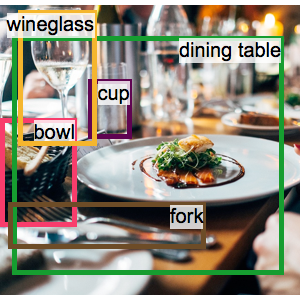

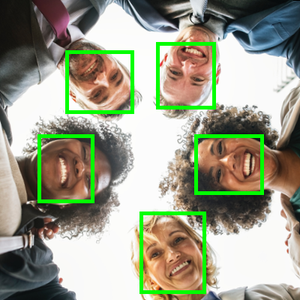

Load an image identification neural network.

Define a function that covers part of an image by bleaching it to a neutral color. The second argument specifies the location of the cover in scaled coordinates, and the third argument specifies the size of the cover as a fraction of the image size.

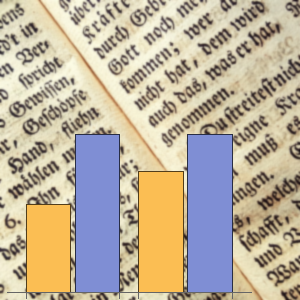
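For readers who want to try the covering step outside the notebook, here is a minimal Python sketch of the same idea. It is not the function shown above: the name `cover_patch`, the list-of-rows grayscale image, the mid-gray fill value, and the top-down row order for the y coordinate are all assumptions made for illustration.

```python
def cover_patch(image, center, size, fill=0.5):
    """Return a copy of `image` with a rectangular patch set to `fill`.

    image  : list of rows, each a list of grayscale values in [0, 1]
    center : (x, y) patch center in scaled coordinates (0..1 across the image)
    size   : patch width and height as a fraction of the image size
    fill   : neutral value written into the patch (assumed mid-gray)
    """
    height, width = len(image), len(image[0])
    cx, cy = center
    # Convert the scaled patch bounds to pixel indices, clipped to the image.
    x0 = max(0, round((cx - size / 2) * width))
    x1 = min(width, round((cx + size / 2) * width))
    y0 = max(0, round((cy - size / 2) * height))
    y1 = min(height, round((cy + size / 2) * height))
    covered = [row[:] for row in image]  # copy so the input stays untouched
    for y in range(y0, y1):
        for x in range(x0, x1):
            covered[y][x] = fill
    return covered
```

Returning a copy rather than modifying the input matters here, because the same original image is covered many times at different positions.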

Next, define a function that systematically covers parts of the image and records the effect on the classification probability of the specified neural network. The resulting sensitivity map is displayed as brightness in the output image.

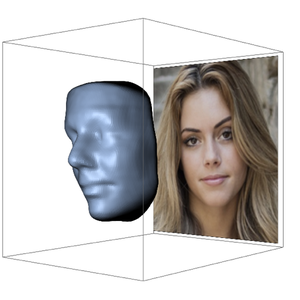

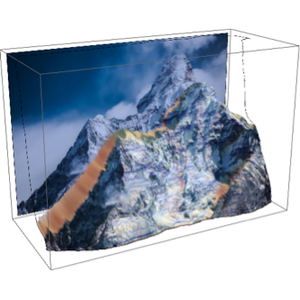
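The systematic scan can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the notebook's implementation: `classify` is a hypothetical callable that returns the probability of the class of interest, the image is a list of grayscale rows, and `steps` and `size` are made-up parameter names. The sketch returns raw probability drops rather than a brightness image.

```python
def cover(image, center, size, fill=0.5):
    """Copy `image` and set a patch (scaled center, fractional size) to `fill`."""
    height, width = len(image), len(image[0])
    cx, cy = center
    x0 = max(0, round((cx - size / 2) * width))
    x1 = min(width, round((cx + size / 2) * width))
    y0 = max(0, round((cy - size / 2) * height))
    y1 = min(height, round((cy + size / 2) * height))
    covered = [row[:] for row in image]
    for y in range(y0, y1):
        for x in range(x0, x1):
            covered[y][x] = fill
    return covered


def sensitivity_map(image, classify, steps=8, size=0.25):
    """Return a steps x steps grid of probability drops.

    Entry [j][i] is the baseline probability minus the probability obtained
    with a patch centered on grid cell (i, j) covered. Positive values mark
    areas that support the classification, negative values areas that
    inhibit it.
    """
    baseline = classify(image)
    grid = []
    for j in range(steps):
        row = []
        for i in range(steps):
            center = ((i + 0.5) / steps, (j + 0.5) / steps)
            row.append(baseline - classify(cover(image, center, size)))
        grid.append(row)
    return grid
```

Each grid cell costs one forward pass of the network, so `steps` trades map resolution against run time.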

Generate the sensitivity map of a horse classified as a Morgan with 74% probability.

Create a scale-independent sensitivity map by averaging over different cover sizes.
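One way to read "averaging over different cover sizes" is sketched below in Python. The particular sizes, the function names, and the `classify` callable are assumptions for illustration; the source does not state which sizes it averages over. Every map is computed on the same grid so the maps can be averaged cell by cell.

```python
def cover(image, center, size, fill=0.5):
    """Copy `image` and set a patch (scaled center, fractional size) to `fill`."""
    height, width = len(image), len(image[0])
    cx, cy = center
    x0 = max(0, round((cx - size / 2) * width))
    x1 = min(width, round((cx + size / 2) * width))
    y0 = max(0, round((cy - size / 2) * height))
    y1 = min(height, round((cy + size / 2) * height))
    covered = [row[:] for row in image]
    for y in range(y0, y1):
        for x in range(x0, x1):
            covered[y][x] = fill
    return covered


def sensitivity_map(image, classify, steps, size):
    """Grid of probability drops for one cover size."""
    baseline = classify(image)
    return [
        [
            baseline - classify(cover(image, ((i + 0.5) / steps, (j + 0.5) / steps), size))
            for i in range(steps)
        ]
        for j in range(steps)
    ]


def multiscale_sensitivity_map(image, classify, steps=8, sizes=(0.1, 0.2, 0.4)):
    """Average the per-size sensitivity maps cell by cell."""
    maps = [sensitivity_map(image, classify, steps, s) for s in sizes]
    return [
        [sum(m[j][i] for m in maps) / len(maps) for i in range(steps)]
        for j in range(steps)
    ]
```

Small covers pick out fine details, while large covers reveal whether a whole object matters, so the average is less tied to any single cover size.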

Here is a wolf classification with a high probability and a focused sensitivity map.

Scale-independent sensitivity map of the wolf.

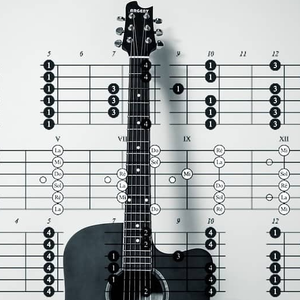

Here is a porcelain wolf misclassified as a soap dish because of the figure's stand.

A different network produces another misclassification by focusing on the figure's back, which looks like the back of a dinosaur.